We use machine learning technology to do auto-translation. Click "English" on top navigation bar to check Chinese version.

Cross-account Amazon Aurora MySQL migration with Aurora cloning and binlog replication for reduced downtime

Migrating your relational databases on

In this post, we discuss the steps and best practices to perform the initial data load for this type of migration, followed by configuring binary log replication to reduce downtime, and steps to cut over. Although this post focuses on in-region migration steps of an Aurora MySQL-Compatible database (DB) cluster from one Amazon Web Services account to another Amazon Web Services account, you can also utilize this solution for the following use cases:

-

Migrate Aurora MySQL to another

Amazon Virtual Private Cloud (Amazon VPC) in an existing Amazon Web Services account. - Migrate Aurora MySQL to another subnet group in same or different Amazon VPC

Solution overview

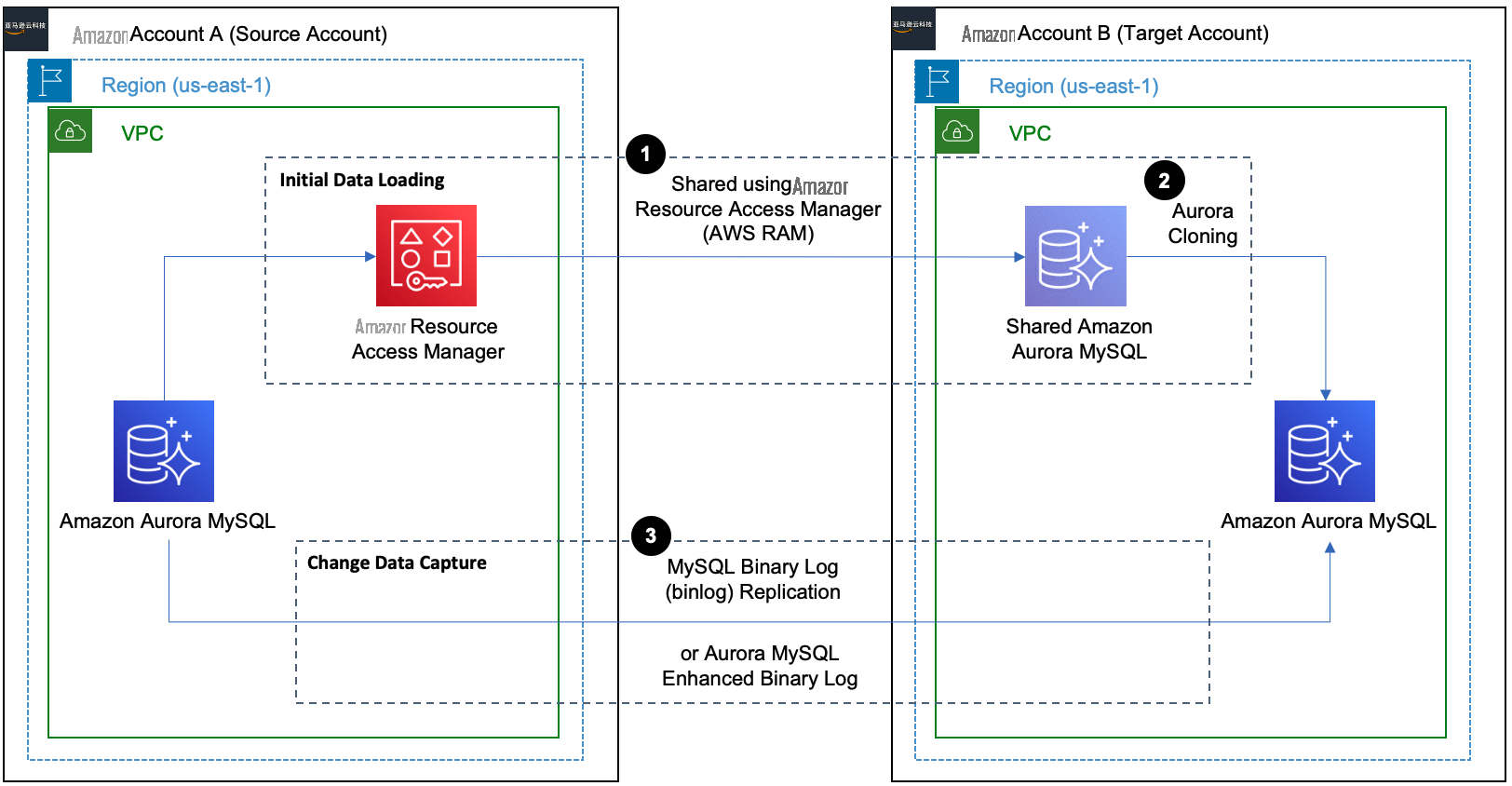

This solution involves two phases: initial data load and ongoing replication (change data capture).

Phase 1: Initial data load

There are multiple options to perform the initial data load, such as the following:

-

Database Cloning: For Aurora MySQL, you can use

Aurora cloning . Aurora cloning is recommended for cross-account migration if source and target Amazon Web Services accounts are in same Amazon Web Services region. -

Snapshot Restore:

Restoring from a DB cluster snapshot for Aurora MySQL. This approach is recommended for cross-account migration if source and target Amazon Web Services accounts are in different Amazon Web Services regions. -

Logical restore: Use a native or third-party database migration method such as

mysqldump ormydumper/myloader .

In this post, we use Aurora cloning to perform the initial data load for Aurora MySQL DB cluster because this is a fast and cost-effective method compared to other approaches.

Phase 2: Change data capture

You can use MySQL

If you are using

Architecture overview

In this post, we use

To implement this solution, we complete the following steps:

- Configure binary logs

- Perform the initial data load

- Create a replication user on the source

- Configure binary log replication for change data capture (CDC)

- Monitor replication

- Perform cutover

For this demonstration, the source Amazon Web Services account is

6144xxxxxxxx

and the target account is

2634xxxxxxxx

. The source Aurora MySQL DB cluster is named

aurora-mysql-source-01

and the target cluster is

aurora-mysql-target-01

, using version Amazon Aurora MySQL-Compatible Edition version 3 (with MySQL 8.0 compatibility).

Note that launching new resources in your Amazon Web Services account incurs additional costs.

Prerequisites

Before you get started, make sure you complete the following prerequisites:

-

Set up source and target Amazon Web Services accounts network connectivity using either

VPC peering orAmazon Web Services Transit Gateway . For more information, seeCreate a VPC peering connection . -

An Amazon VPC security group with inbound rules to allow connections from the source to target. For more information, see

Control traffic to resources using security groups . -

Latest version of the

Amazon Web Services CLI installed and configured on your host such as EC2, Cloud9 or similar instance.

Migration for Aurora MySQL

1. Set up Binary log replication

Before you start the initial data load, binary log replication must be active and capturing binlogs. The binlogs can then be used for continuous replication. Aurora MySQL supports the row-based, statement-based, and mixed binary logging formats. By default, binary logs are disabled on Aurora MySQL and do not need to be enabled unless data is being replicated out of the Aurora cluster. In order to enable binlogs, set the

binlog_format

parameter to

ROW

,

STATEMENT

, or

MIXED

in the custom DB cluster parameter group attached to the source DB cluster. Since

binlog_format

is a static parameter; you must reboot the writer DB instance of your cluster for the change to take effect. For more information on enabling Aurora MySQL binary logging, refer to

To verify the status of binary logging use the following command in your source Aurora MySQL database:

You can also consider enabling enhanced binlog that reduces the compute performance overhead caused by turning on binlog. To reduce overhead, enhanced binlog writes the binary and transactions logs to storage in parallel, which minimizes the data written at the transaction commit time. The enhanced binlog is available on Aurora MySQL 3.03.1 version (compatible with MySQL 8.0.26) and higher. For additional information, refer to

2. Configure binary log retention

While setting up ongoing replication, we need to ensure that binary logs on the primary Aurora MySQL instance are being retained long enough for change data capture. To set the binary log retention time frame, use the

binlog retention hours

along with the number of hours to retain binlog files on the DB cluster. The maximum value for Aurora MySQL 3.x, Aurora MySQL version 2.11 and above is 90 days. The default value of

binlog retention hours

is

NULL

. For Aurora MySQL,

NULL

means binary logs are not retained.

You should determine the binary log retention hours based few key factors like size of the Aurora MySQL DB cluster, the time it would take in Aurora cloning and binary log replication start timings after aurora cloning. In this post, you use the following code to configure binary log retention period to 3 days on Aurora MySQL:

3. Perform the initial data load

Performing the initial data load for Aurora MySQL consists of several steps like using Amazon Web Services Resource Access Manager (Amazon Web Services RAM) to securely share DB cluster with other account and then setting up Aurora cloning in the target account.

3.1 Allow target Amazon Web Services account to clone DB cluster

To create the clone of the source Aurora MySQL DB cluster in the target Amazon Web Services account, you need to grant permission to target Amazon Web Services account. Use

In this post, you use following Amazon Web Services RAM CLI command

Next, use Amazon Web Services RAM CLI command

After listing all the pending invitations, use Amazon Web Services RAM CLI command

resourceShareInvitationArn

received in previous command :

You will also share the

3.2 Create the target Aurora MySQL DB cluster using an Aurora clone

After you have successfully shared the Aurora MySQL DB cluster with the target account, create an

Note: The source DB cluster remains available for all transactions during the cloning process with no impact to performance.

In this post, you use Amazon Web Services RDS CLI command

The

restore-db-cluster-to-point-in-time

Amazon Web Services CLI command restores the DB cluster only. Use following command to check the cloning status:

Once the cluster is in

available

status, you can create a writer instance by invoking the Amazon Web Services RDS CLI command

aurora-mysql-target-01

.

4. Create a replication user on the source database

Create a user ID on the source Aurora MySQL database solely for replication with

REPLICATION CLIENT

and

REPLICATION SLAVE

privileges. See the following code as an example:

For more details on the parameters, refer to

5. Configure ongoing replication

Connections handling binary log replication are secure as they never leave the Amazon Web Services network because both the source and target databases are in private subnets, and the source and target accounts are peered with a VPC peering connection. To initiate replication, let’s see how we can retrieve the required binary log details.

Get the binary log file name and position by calling the

describe-events

command with an output snippet:

Save the binary log file name (in this case we have used

mysql-bin-changelog.000013

and position

77991983

values) to start replication from the source Aurora MySQL DB cluster. Now connect to the target Aurora MySQL DB cluster using the writer endpoint to start replication from the source Aurora MySQL by calling the

In this post, we use following to configure and start the replication:

Starting with Aurora MySQL version 3, Data Control Language (DCL) statements such as CREATE USER, GRANT and REVOKE are no longer replicated with the binary log replication. If you plan to run any DCL statements while replication is ongoing, you will need to run DCL statements on both the source and target databases.

6. Monitor replication

To monitor how far the target DB is behind the source DB, connect to the target DB instance and run the

SHOW REPLICA STATUS

(Aurora MySQL version 3) or

SHOW SLAVE STATUS

(Aurora MySQL version 2). In the following command output, the

Seconds_Behind_Source

field tells you how far the target DB instance is behind the source:

7. Perform cutover

Once the replica lag is a near zero value, you are ready for cutover to point the application to the Aurora MySQL DB cluster in the target account. We recommend planning your cutover during a low traffic window and following your in-house business cutover checklist and processes. The following are key steps for the cutover process:

- Stop accepting connections on the source database. You can update the VPC security group associated with the source by removing inbound rules that allow connectivity.

- Make sure replication lag from the source to the target DB instance is 0.

-

Stop binary log replication by calling the

mysql.rds_stop_replication procedure.

- Update the application configuration or DNS CNAME record with the target database endpoints.

- You can also set up replication from new Aurora MySQL cluster in the target account to old cluster using binary log replication to address fallback requirements before starting the application with a new target.

- Start your application with the target Aurora MySQL database.

Clean up

After the successful cutover and application is connected to the Aurora MySQL cluster in the target account, you may decide to temporarily stop or delete the source Aurora MySQL cluster. For more information to stop the Aurora cluster, refer

Conclusion

In this post, we’ve discussed the various steps involved to migrate your Aurora MySQL cluster from one Amazon Web Services account to another Amazon Web Services account while setting up binary log replication to reduce the downtime. With the complexity involved in database migrations, we highly recommend testing the migration steps in non-production environments prior to making changes in production. If you are planning to use

About the Authors

Alok Srivastava

is a Senior Database Consultant at Amazon Web Services. He works with customers and partners in their journey to the Amazon Web Services Cloud with a focus on database migration and modernization programs.

Alok Srivastava

is a Senior Database Consultant at Amazon Web Services. He works with customers and partners in their journey to the Amazon Web Services Cloud with a focus on database migration and modernization programs.

Shagun Arora

is a Database Specialist Solutions Architect at Amazon Web Services. She works with customers to design scalable, highly available, and secure solutions in the Amazon Web Services Cloud.

Shagun Arora

is a Database Specialist Solutions Architect at Amazon Web Services. She works with customers to design scalable, highly available, and secure solutions in the Amazon Web Services Cloud.

The mentioned AWS GenAI Services service names relating to generative AI are only available or previewed in the Global Regions. Amazon Web Services China promotes AWS GenAI Services relating to generative AI solely for China-to-global business purposes and/or advanced technology introduction.