
Optimize costs by moving your self-managed open source software (OSS) Redis workloads to Amazon ElastiCache

In this blog post, we explain why you should consider moving your self-managed open source software (OSS) Redis workloads to managed

Developers often opt for self-managed OSS Redis deployments because hosting OSS Redis on their own infrastructure gives them flexibility and control. As application usage and adoption grow, scaling and sharding become vital to ensure resiliency and avoid performance degradation. Initially, a simple cache can be deployed on a single medium-sized server. However, as the dataset grows to tens of gigabytes and throughput requirements increase, developers must set up an OSS Redis cluster with read replicas and sharding to handle the load. As the cache becomes a key architecture component, ensuring availability with Redis Sentinel or Redis Cluster becomes critical.

Self-managing OSS Redis on Amazon Elastic Compute Cloud (

Fully managed cache in the cloud – Amazon ElastiCache

Amazon ElastiCache is a fully managed service that makes it easy to deploy, operate, and scale a

Reduction of TCO

By moving their workloads to ElastiCache, businesses can use online scaling, automated backups, resiliency with automatic failover, and built-in monitoring and alerting without the overhead of managing the underlying resources. This can significantly reduce the time and resources needed to manage OSS Redis workloads while improving availability.

Example Workload – Let's look at a workload and compare the cost of running it on a self-managed, on-premises deployment of OSS Redis versus ElastiCache. Anycompany operates a retail e-commerce application, and their product catalog plays a crucial role in driving sales. To improve performance and user experience, they need to cache the product catalog and user data for quicker responses.
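To make the caching requirement concrete, here is a minimal cache-aside sketch in Python. It is illustrative only: `load_product_from_db`, the key format, and the five-minute TTL are hypothetical, and `InMemoryCache` is a stand-in so the example runs without a server. Its `get` and `setex` methods mirror the calls of the same name in the redis-py client, so a real client pointed at an OSS Redis or ElastiCache endpoint could presumably be swapped in.

```python
import json
import time


class InMemoryCache:
    """Stand-in for a Redis client; supports only get and setex."""

    def __init__(self):
        self._data = {}

    def get(self, key):
        entry = self._data.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if time.monotonic() >= expires_at:
            # The entry has expired, so treat it as a cache miss.
            del self._data[key]
            return None
        return value

    def setex(self, key, ttl_seconds, value):
        self._data[key] = (value, time.monotonic() + ttl_seconds)


def load_product_from_db(product_id):
    # Hypothetical slow lookup against the system of record.
    return {"id": product_id, "name": f"Product {product_id}"}


def get_product(cache, product_id, ttl_seconds=300):
    """Cache-aside read: try the cache, fall back to the database, then populate."""
    key = f"product:{product_id}"
    cached = cache.get(key)
    if cached is not None:
        return json.loads(cached), "hit"
    product = load_product_from_db(product_id)
    cache.setex(key, ttl_seconds, json.dumps(product))
    return product, "miss"


cache = InMemoryCache()
first = get_product(cache, 42)
second = get_product(cache, 42)
print(first[1], second[1])
```

The first call misses and fills the cache; the second is served from it. The TTL bounds how stale a catalog entry can become, which is a design choice to tune per workload.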

Infrastructure overhead : As the company expanded its product offerings, the cache size grew, and they had to scale their infrastructure to a clustered Redis implementation with read replicas. This allowed them to meet throughput needs that varied from a few hundred requests per second to tens of thousands during holidays, while ensuring availability. However, the environment had to be provisioned upfront to handle peak traffic, which left capacity unused during off-peak periods and unnecessarily increased the total cost.

Infrastructure security, compliance and auditing overhead : As the improved user experience and growth led to the adoption of OSS Redis across other services such as cart management and checkout, Anycompany needed to implement strong security practices to safeguard sensitive user information and credit data. The audits and certifications this required placed additional compliance requirements on the infrastructure and added compliance and auditing costs.

Overhead of monitoring and alerting : When the OSS Redis cluster had intermittent or prolonged node failures, Anycompany had to rebuild the instances and rehydrate the cache, resulting in revenue losses. This drove additional costs for monitoring and alerting software, and for the operations setup and support staff needed to handle failures.

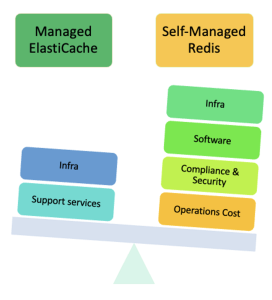

When self-managing workloads, enterprises need to consider infrastructure and core software, infrastructure compliance, security, and operational costs. With managed ElastiCache, the costs mainly consist of the cache instances and cloud support services. ElastiCache lets Anycompany scale in or out in sync with usage patterns. As a result, they decided to move their workloads to ElastiCache, which removed the additional infrastructure, compliance, and monitoring costs and simplified their operations.
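The effect of provisioning for peak versus scaling with usage can be sketched with simple arithmetic. Every figure in this example is hypothetical (the per-node throughput, the hourly price, the traffic profile, and the two-node floor); none of it is actual ElastiCache or EC2 pricing, and it ignores the compliance and operations costs discussed above.

```python
import math

# All figures below are hypothetical and chosen only to illustrate the arithmetic.
NODE_CAPACITY_RPS = 5_000      # assumed requests/second one cache node can serve
NODE_PRICE_PER_HOUR = 0.25     # assumed hourly price per node, in USD
MIN_NODES = 2                  # assumed floor kept for availability


def nodes_needed(rps):
    """Nodes required to serve a given request rate, never below the floor."""
    return max(MIN_NODES, math.ceil(rps / NODE_CAPACITY_RPS))


def peak_provisioned_cost(hourly_rps):
    """Cost when the fleet is sized once for the busiest hour."""
    return nodes_needed(max(hourly_rps)) * len(hourly_rps) * NODE_PRICE_PER_HOUR


def demand_following_cost(hourly_rps):
    """Cost when the node count is adjusted every hour to match demand."""
    return sum(nodes_needed(rps) for rps in hourly_rps) * NODE_PRICE_PER_HOUR


# One day: 20 quiet hours at 500 rps and a 4-hour spike at 40,000 rps.
day = [500] * 20 + [40_000] * 4
fixed = peak_provisioned_cost(day)
elastic = demand_following_cost(day)
print(f"peak-provisioned: ${fixed:.2f}, demand-following: ${elastic:.2f}")
# prints "peak-provisioned: $48.00, demand-following: $18.00"
```

Under these assumed numbers, the peak-sized fleet pays for eight nodes all day, while the demand-following fleet pays for eight nodes only during the four-hour spike.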

Key ElastiCache features that help with cost optimization

ElastiCache offers several features that can enable companies to efficiently scale their applications, reduce costs and drive growth.

High Availability : ElastiCache is highly available (99.99% SLA when running Multi-AZ) and resilient, with built-in failover and data replication capabilities. This reduces the risk of downtime and its associated costs, such as lost revenue and damage to reputation. With a
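As a rough illustration of what a 99.99% availability figure means in practice, the sketch below converts a percentage into a downtime budget. It is plain arithmetic under the assumption of fixed 30-day and 365-day periods; how the SLA itself measures and credits downtime is defined by the SLA terms, not by this calculation.

```python
def allowed_downtime_minutes(availability_percent, period_days):
    """Maximum downtime, in minutes, that still meets the availability target."""
    period_minutes = period_days * 24 * 60
    return period_minutes * (100 - availability_percent) / 100


monthly = allowed_downtime_minutes(99.99, 30)
yearly = allowed_downtime_minutes(99.99, 365)
print(f"{monthly:.2f} min per 30 days, {yearly:.2f} min per 365 days")
# prints "4.32 min per 30 days, 52.56 min per 365 days"
```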

Scalability : With Amazon ElastiCache for Redis, you can start small and easily scale your clusters as your application grows. ElastiCache offers a large variety of instance types and configurations, including support for Graviton instances. It is designed to support online cluster resizing, so you can scale out, scale in, and rebalance your Redis clusters without downtime. ElastiCache’s Autoscaling deploys resources and nodes in accordance with traffic patterns, so the same workload consumes fewer resources and capacity is provisioned only as needed. This avoids the cost of provisioning resources upfront to handle future peaks in demand.
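A target-tracking configuration of this kind might be expressed as follows. This is a sketch, not a definitive setup: it builds the arguments for the Application Auto Scaling `register_scalable_target` and `put_scaling_policy` calls as exposed by boto3, based on our understanding of that API, and the replication group ID, shard limits, CPU target, and policy name are all assumptions. Verify the parameter values and the cluster prerequisites against the current ElastiCache documentation before using anything like it.

```python
def scalable_target_params(replication_group_id, min_shards, max_shards):
    """Arguments for registering the shard count as a scalable target."""
    return {
        "ServiceNamespace": "elasticache",
        "ResourceId": f"replication-group/{replication_group_id}",
        "ScalableDimension": "elasticache:replication-group:NodeGroups",
        "MinCapacity": min_shards,
        "MaxCapacity": max_shards,
    }


def target_tracking_policy_params(replication_group_id, target_cpu_percent):
    """Arguments for a policy that tracks primary-node engine CPU utilization."""
    return {
        "PolicyName": f"{replication_group_id}-cpu-target-tracking",  # hypothetical name
        "ServiceNamespace": "elasticache",
        "ResourceId": f"replication-group/{replication_group_id}",
        "ScalableDimension": "elasticache:replication-group:NodeGroups",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            "TargetValue": float(target_cpu_percent),
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "ElastiCachePrimaryEngineCPUUtilization",
            },
        },
    }


def configure_autoscaling(client, replication_group_id, min_shards, max_shards,
                          target_cpu_percent):
    """Apply both calls using an Application Auto Scaling client passed in by the caller."""
    client.register_scalable_target(
        **scalable_target_params(replication_group_id, min_shards, max_shards)
    )
    client.put_scaling_policy(
        **target_tracking_policy_params(replication_group_id, target_cpu_percent)
    )
```

With boto3 installed and credentials configured, a call could look like `configure_autoscaling(boto3.client("application-autoscaling"), "my-redis", 2, 10, 60)`, where `my-redis` is a placeholder. Passing the client in keeps the functions testable without AWS access.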

Monitoring : ElastiCache provides enhanced visibility via

Extreme Performance (price/performance) : ElastiCache’s

Data Tiering : When workloads have large memory footprint,

Security and Compliance:

ElastiCache is a PCI compliant, HIPAA eligible, FedRAMP authorized

Reserved Instances (RIs):

Lastly, ElastiCache provides a cost-effective pricing model with

In summary, the following table highlights the heavy lifting that ElastiCache handles to help customers optimize costs:

| Feature | Self-managed OSS Redis | Amazon ElastiCache |
| --- | --- | --- |
| Autoscaling | No | Yes |
| Data tiering | No | Yes |
| Availability | Redis Sentinel or Cluster with manual intervention | 99.99% with fast failover and automatic recovery |
| Compliance | Additional price and effort | SOC1, SOC2, SOC3, ISO, MTCS, C5, PCI-DSS, HIPAA |
| Enhanced security | No | Yes |
| Online horizontal scaling | No | Yes |
| Monitoring | External tools | Native CloudWatch integration and aggregated metrics |
| Enhanced I/O Multiplexing | No | Yes |

Conclusion

Amazon ElastiCache for Redis is fully compatible with the open source version and provides security, compliance, high availability, and reliability while optimizing costs. As explained in this post, managing OSS Redis can be tedious. ElastiCache eliminates the undifferentiated heavy lifting of administrative tasks such as monitoring, patching, backups, and automatic failover. You can also automatically scale and resize your cluster to terabytes of data. The benefits of managed ElastiCache allow you to optimize overall TCO and focus on your business and your data instead of your operations. To learn more about ElastiCache, visit

About the authors

Sashi Varanasi

is a Worldwide leader for Specialist Solutions architecture, In-Memory and Blockchain Data services. She has 25+ years of IT Industry experience and has been with Amazon Web Services since 2019. Prior to Amazon Web Services, she worked in Product Engineering and Enterprise Architecture leadership roles at various companies including Sabre Corp, Kemper Insurance and Motorola.


Lakshmi Peri

is a Sr. Solutions Architect specialized in NoSQL databases. She has more than a decade of experience working with various NoSQL databases and architecting highly scalable applications with distributed technologies. In her spare time, she enjoys traveling to new places and spending time with family.

