
Sending and receiving webhooks on Amazon Web Services: Innovate with event notifications

This post is written by Daniel Wirjo, Solutions Architect, and Justin Plock, Principal Solutions Architect.

Commonly known as reverse APIs or push APIs, webhooks provide a way for applications to integrate with each other and communicate in near real time. They enable integration for business and system events.

Whether you’re building a software as a service (SaaS) application that integrates with your customers’ workflows or receiving transaction notifications from a vendor, webhooks play a critical role in unlocking innovation, enhancing user experience, and streamlining operations.

This post explains how to build with webhooks on Amazon Web Services and covers two scenarios:

- Webhooks Provider: A SaaS application that sends webhooks to an external API.

- Webhooks Consumer: An API that receives webhooks, with the capacity to handle large payloads.

It includes high-level reference architectures with considerations, best practices and

Sending webhooks

To send webhooks, you generate events, and deliver them to third-party APIs. These events facilitate updates, workflows, and actions in the third-party system. For example, a payments platform (provider) can send notifications for payment statuses, allowing ecommerce stores (consumers) to ship goods upon confirmation.

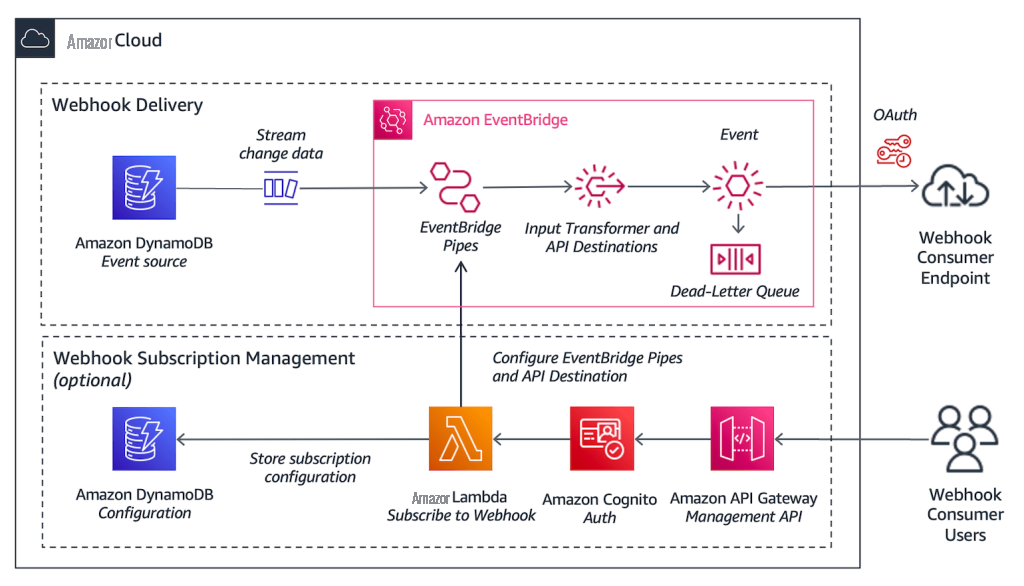

Amazon Web Services reference architecture for a webhook provider

The architecture consists of two services:

- Webhook delivery: An application that delivers webhooks to an external endpoint specified by the consumer.

- Subscription management: A management API that enables consumers to manage their configuration, including the endpoints for delivery and the events they subscribe to.
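The post does not prescribe a retry policy for the webhook delivery service. As an illustration only, the following Python sketch shows one common approach, exponential backoff, which is assumed here rather than taken from the reference architecture. The `send` callable is hypothetical: it stands in for whatever HTTP client posts the body to the consumer's endpoint and returns the status code.

```python
import time
from typing import Callable


def deliver_with_retry(
    send: Callable[[bytes], int],
    body: bytes,
    max_attempts: int = 5,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Attempt delivery up to max_attempts times with exponential backoff.

    `send` is a hypothetical callable that POSTs `body` to the consumer's
    endpoint and returns the HTTP status code.
    """
    for attempt in range(max_attempts):
        try:
            status = send(body)
            if 200 <= status < 300:
                return True  # consumer acknowledged the webhook
            if 400 <= status < 500 and status != 429:
                return False  # client error other than throttling: retrying won't help
        except OSError:
            pass  # network failure (includes URLError and timeouts): retryable
        if attempt < max_attempts - 1:
            sleep(base_delay * (2 ** attempt))  # 1s, 2s, 4s, ... with the defaults
    return False
```

Keeping `send` and `sleep` injectable makes the policy testable without network calls. Production senders often add random jitter to each delay so that many failed deliveries do not retry in lockstep.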

Considerations and best practices for sending webhooks

When building an application to send webhooks, consider the following factors:

Event generation:

Consider how you generate events. This example uses

With EventBridge, you send events in near real time. If events are not time-sensitive, you can send multiple events in a batch. This can be done by polling for new events at a specified frequency using

Filtering:

EventBridge Pipes support

Delivery:

Payload Structure:

Consider how consumers process event payloads. This example uses an

Payload Size: For fast and reliable delivery, keep payload size to a minimum. Consider delivering only necessary details, such as identifiers and status. For additional information, you can provide consumers with a separate API. Consumers can then separately call this API to retrieve the additional information.
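To illustrate a minimal payload, here is a Python sketch of a "thin" event that carries only an identifier and a status, plus a pointer to a separate details API. The event type, the field names, and the `https://api.example.com/...` URL are hypothetical placeholders.

```python
import json
from datetime import datetime, timezone


def build_thin_event(payment: dict) -> bytes:
    """Build a minimal webhook body carrying only identifiers and status.

    Field names and the `details_url` pattern are hypothetical.
    """
    event = {
        "type": "payment.status_changed",
        "payment_id": payment["id"],
        "status": payment["status"],
        "occurred_at": datetime.now(timezone.utc).isoformat(),
        # Consumers call this (hypothetical) API to retrieve the full record.
        "details_url": f"https://api.example.com/payments/{payment['id']}",
    }
    # Compact separators keep the serialized body small.
    return json.dumps(event, separators=(",", ":")).encode("utf-8")
```

Everything else about the payment, such as line items, stays behind the details API, so the webhook body stays small regardless of how large the underlying record grows.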

Security and Authorization:

To deliver events securely, you establish a

Subscription Management:

Consider how consumers can manage their subscriptions, such as specifying HTTPS endpoints and the event types to subscribe to. DynamoDB stores this configuration.
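A minimal Python sketch of subscription matching follows. The `Subscription` dataclass stands in for an item in the DynamoDB configuration table, and the in-memory list stands in for a query against that table; the field names and event type strings are hypothetical. Delivering only to consumers subscribed to a given event type also keeps delivery volume, and therefore cost, down.

```python
from dataclasses import dataclass, field
from urllib.parse import urlparse


@dataclass
class Subscription:
    """One consumer's webhook configuration (stand-in for a DynamoDB item)."""
    consumer_id: str
    endpoint: str
    event_types: set[str] = field(default_factory=set)

    def __post_init__(self) -> None:
        # Only accept HTTPS endpoints.
        if urlparse(self.endpoint).scheme != "https":
            raise ValueError(f"endpoint must use HTTPS: {self.endpoint}")


def targets_for(event_type: str, subscriptions: list[Subscription]) -> list[str]:
    """Return the endpoints of consumers subscribed to `event_type`."""
    return [s.endpoint for s in subscriptions if event_type in s.event_types]
```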

Costs: In practice, sending webhooks incurs cost, which may become significant as you grow and generate more events. Consider implementing usage policies, quotas, and allowing consumers to subscribe only to the event types that they need.

Monetization: Consider billing consumers based on their usage volume or tier. For example, you can offer a free tier that provides low-friction access to webhooks, but only up to a certain volume. For additional volume, you charge a usage fee that is aligned with the business value that your webhooks provide. At high volumes, you offer a premium tier that provides dedicated infrastructure for certain consumers.
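As a worked example of usage-based billing, the following Python function computes a monthly fee with a free allowance followed by a per-1,000-event rate. The allowance (10,000 events) and the rate (50 cents per 1,000 events) are hypothetical placeholders, not recommended prices.

```python
def monthly_fee_cents(events: int, free_events: int = 10_000,
                      cents_per_thousand: int = 50) -> int:
    """Usage fee in cents: free up to `free_events`, then a per-1,000 rate.

    Tier sizes and prices are hypothetical placeholders.
    """
    billable = max(0, events - free_events)
    # Round partial blocks of 1,000 up so every billable event is charged.
    blocks = -(-billable // 1000)
    return blocks * cents_per_thousand
```

Working in integer cents avoids floating-point rounding in billing. For example, 25,000 events leaves 15,000 billable events, or 15 blocks at 50 cents, for a fee of 750 cents.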

Monitoring and troubleshooting: Beyond the architecture, consider processes for day-to-day operations. As endpoints are managed by external parties, consider enabling self-service. For example, allow consumers to view statuses, replay events, and search for past webhook logs to diagnose issues.
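To make the self-service idea concrete, here is a small Python sketch of a delivery log that lets a consumer search their own past deliveries and replay one. The in-memory dictionary is a stand-in for a persistent store, and the `DeliveryRecord` fields and status values are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class DeliveryRecord:
    event_id: str
    consumer_id: str
    body: bytes
    status: str  # "delivered" or "failed"


class DeliveryLog:
    """In-memory stand-in for a store of past webhook deliveries."""

    def __init__(self) -> None:
        self._records: dict[str, DeliveryRecord] = {}

    def record(self, rec: DeliveryRecord) -> None:
        self._records[rec.event_id] = rec

    def search(self, consumer_id: str, status: Optional[str] = None) -> list[DeliveryRecord]:
        """List a consumer's own deliveries, optionally filtered by status."""
        return [r for r in self._records.values()
                if r.consumer_id == consumer_id and (status is None or r.status == status)]

    def replay(self, event_id: str, consumer_id: str,
               deliver: Callable[[bytes], bool]) -> bool:
        """Re-send a stored event; only the consumer that owns it may replay it."""
        rec = self._records.get(event_id)
        if rec is None or rec.consumer_id != consumer_id:
            raise KeyError(event_id)
        rec.status = "delivered" if deliver(rec.body) else "failed"
        return rec.status == "delivered"
```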

Advanced Scenarios:

This example is designed for popular use cases. For advanced scenarios, consider alternative

Receiving webhooks

To receive webhooks, you need an API endpoint that you provide to the webhook provider. For example, an ecommerce store (consumer) may rely on notifications from its payment platform (provider) to ensure that goods are shipped in a timely manner. Webhooks present a unique challenge: the consumer must be scalable and resilient, and must ensure that all requests are received.

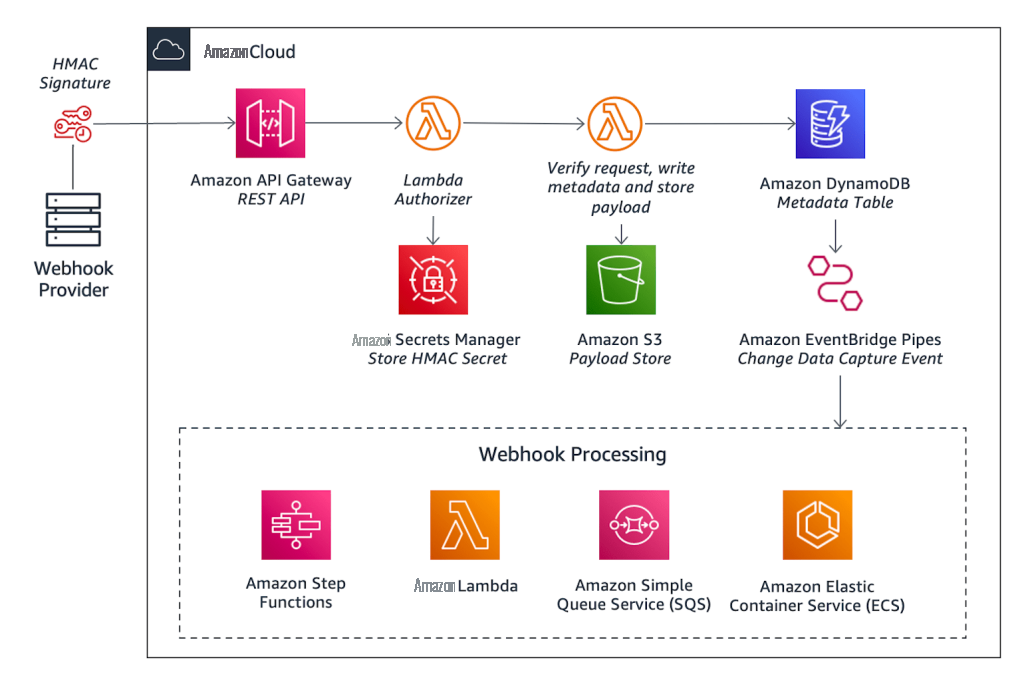

Amazon Web Services reference architecture for a webhook consumer

In this scenario, consider an advanced use case that can handle large payloads by using the

At a high-level, the architecture consists of:

- API: An API endpoint to receive webhooks. An event-driven system then authorizes and processes the received webhooks.

- Payload Store: S3 provides scalable storage for large payloads.

- Webhook Processing: EventBridge Pipes provide an extensible architecture for processing. They can batch, filter, enrich, and send events to a range of processing services as targets.

Considerations and best practices for receiving webhooks

When building an application to receive webhooks, consider the following factors:

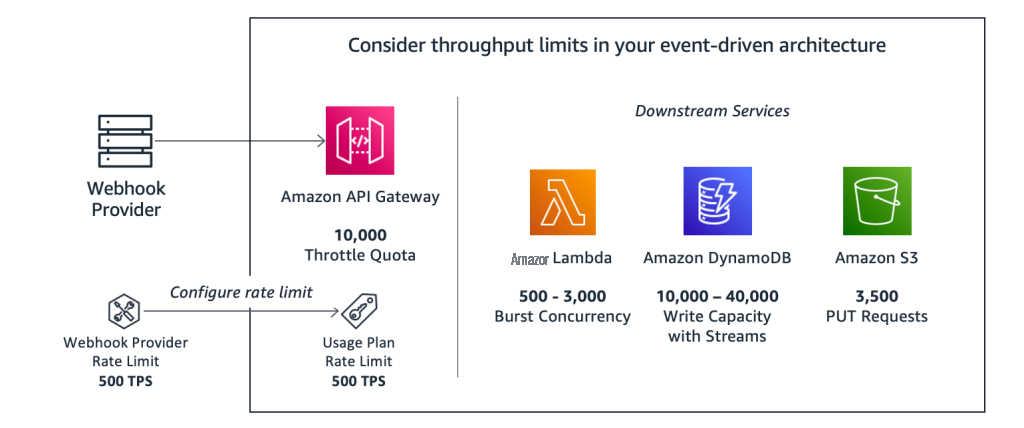

Scalability: Providers typically send events as they occur. API Gateway provides a scalable managed endpoint to receive events. If the endpoint is unavailable or throttled, providers may retry the request; however, this is not guaranteed. Therefore, it is important to

In addition, API Gateway allows you to

Authorization and Verification: Providers can support different authorization methods. Consider a common scenario with Hash-based Message Authentication Code (HMAC), where a shared secret is established and stored in Secrets Manager. A Lambda function then verifies the integrity of the message by checking a signature in the request header. Typically, the signature includes a timestamped nonce with an expiry to mitigate replay attacks, in which an attacker resends previously captured events. Alternatively, if the provider supports OAuth, consider securing the API with Amazon Cognito.
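The verification step can be sketched in Python with the standard `hmac` module. The signing scheme here, an HMAC-SHA256 hex digest over `<timestamp>.<body>` with the timestamp sent in a separate header, is an assumption modeled on common provider conventions; follow your provider's documentation for the actual header names and message format. In the reference architecture, `secret` would be retrieved from Secrets Manager.

```python
import hashlib
import hmac
import time
from typing import Optional


def sign(secret: bytes, timestamp: int, body: bytes) -> str:
    """Compute the signature over '<timestamp>.<body>' (hypothetical scheme)."""
    message = str(timestamp).encode() + b"." + body
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def verify(secret: bytes, body: bytes, timestamp_header: str, signature_header: str,
           tolerance_s: int = 300, now: Optional[float] = None) -> bool:
    """Return True only if the signature matches and the timestamp is fresh."""
    try:
        timestamp = int(timestamp_header)
    except ValueError:
        return False
    current = time.time() if now is None else now
    # Reject stale (or far-future) timestamps to limit replay attacks.
    if abs(current - timestamp) > tolerance_s:
        return False
    expected = sign(secret, timestamp, body)
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected.encode(), signature_header.encode())
```

The timestamp check only narrows the replay window: a request captured and resent within the tolerance still verifies, which is one reason to pair verification with the idempotency check described below.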

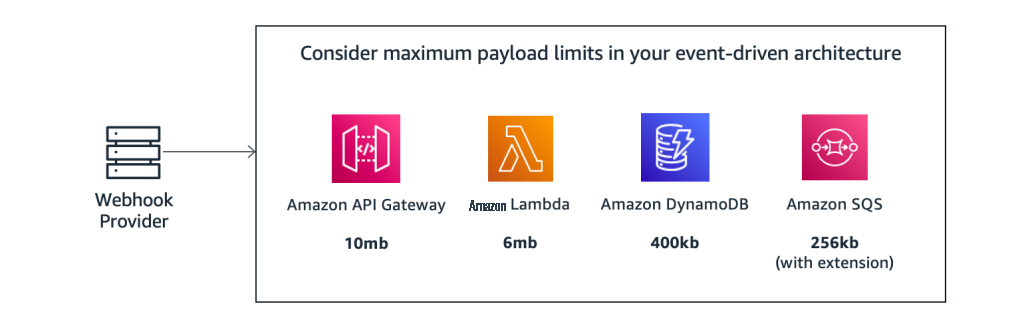

Payload Size: Providers may send a variety of payload sizes. Events can be batched into a single larger request, or individual events may contain significant information. Consider the payload size limits in your event-driven system. API Gateway and Lambda have limits of

Instead of passing the entire payload through the system, you store it in S3 and reference it in DynamoDB by its bucket name and object key. This is known as the
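The claim-check pattern can be sketched in Python with two dictionaries standing in for S3 and DynamoDB, so the example runs without AWS credentials. The bucket name and key layout are hypothetical. With boto3, `check_in` would roughly correspond to an `s3.put_object` call followed by a DynamoDB `put_item`.

```python
import uuid


class ClaimCheckStore:
    """Claim-check sketch: large bodies go to an object store, and only a
    small reference (bucket name and object key) goes into the metadata table.

    The two dicts stand in for S3 and DynamoDB; the bucket name is hypothetical.
    """

    def __init__(self, bucket: str = "webhook-payloads-example") -> None:
        self.bucket = bucket
        self.objects: dict[str, bytes] = {}  # stand-in for S3 objects
        self.table: dict[str, dict] = {}     # stand-in for DynamoDB items

    def check_in(self, body: bytes) -> str:
        """Store the payload, record a reference to it, and return the claim ID."""
        claim_id = str(uuid.uuid4())
        key = f"webhooks/{claim_id}.json"
        self.objects[key] = body
        self.table[claim_id] = {"bucket": self.bucket, "key": key, "size": len(body)}
        return claim_id

    def check_out(self, claim_id: str) -> bytes:
        """Resolve the reference and fetch the full payload for processing."""
        ref = self.table[claim_id]
        return self.objects[ref["key"]]
```

Downstream services pass around only the small claim ID and reference, and fetch the full body when they actually need it, which keeps every hop below its payload size limit.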

Idempotency: For reliability, many providers prioritize at-least-once delivery, even if that means they cannot guarantee exactly-once delivery. They can transmit the same request multiple times, resulting in duplicates. To handle this, a Lambda function checks the event’s unique identifier against previous records in DynamoDB. If the event has not already been processed, the function creates a DynamoDB item.
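A Python sketch of this duplicate check follows. `IdempotencyStore` is an in-memory stand-in for the DynamoDB table. With DynamoDB, the atomic "create only if absent" step is typically a `PutItem` with `ConditionExpression="attribute_not_exists(event_id)"` (assuming `event_id` is the table's partition key), which fails with a `ConditionalCheckFailedException` when the item already exists. The event's `id` field name is hypothetical.

```python
import threading
from typing import Callable


class IdempotencyStore:
    """In-memory stand-in for a DynamoDB table keyed by event ID."""

    def __init__(self) -> None:
        self._seen: set[str] = set()
        self._lock = threading.Lock()

    def put_if_absent(self, event_id: str) -> bool:
        """Atomically record the ID; return False if it was already present."""
        with self._lock:
            if event_id in self._seen:
                return False
            self._seen.add(event_id)
            return True

    def discard(self, event_id: str) -> None:
        with self._lock:
            self._seen.discard(event_id)


def handle_webhook(event: dict, store: IdempotencyStore,
                   process: Callable[[dict], None]) -> str:
    """Process each event ID at most once; duplicates are acknowledged but skipped."""
    if not store.put_if_absent(event["id"]):
        return "duplicate"
    try:
        process(event)
    except Exception:
        store.discard(event["id"])  # allow a provider retry to be processed later
        raise
    return "processed"
```

Removing the record when processing fails lets a provider's retry be processed instead of being skipped as a duplicate.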

Ordering: Consider processing requests in their intended order. As most providers prioritize at-least-once delivery, events can arrive out of order. To indicate order, events may include a timestamp or a sequence identifier in the payload. If not, ordering may be on a best-effort basis, based on when the webhook is received. To handle ordering reliably, select event-driven services that ensure ordering. This example uses
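When the payload does include a sequence identifier, ordering can also be enforced in application code. The Python sketch below is a reorder buffer that releases events only in contiguous sequence order. The `sequence` field, starting at 1 per stream, is a hypothetical assumption, and this is an illustrative alternative rather than the service-based approach the text recommends.

```python
class ReorderBuffer:
    """Release events strictly in sequence order, holding back early arrivals.

    Assumes each payload carries a per-stream integer `sequence` starting at 1
    (a hypothetical field; providers name and scope this differently).
    """

    def __init__(self, next_sequence: int = 1) -> None:
        self.next_sequence = next_sequence
        self._pending: dict[int, dict] = {}  # early arrivals keyed by sequence

    def add(self, event: dict) -> list[dict]:
        """Buffer `event` and return any events that are now ready, in order."""
        seq = event["sequence"]
        if seq >= self.next_sequence:
            # setdefault ignores a redelivered copy of an already-buffered event.
            self._pending.setdefault(seq, event)
        # Events below next_sequence were already released: treat as duplicates.
        ready = []
        while self.next_sequence in self._pending:
            ready.append(self._pending.pop(self.next_sequence))
            self.next_sequence += 1
        return ready
```

Because the buffer lives in memory, it suits a single long-lived consumer. Across concurrent Lambda invocations the state would need to live in an external store, and a permanently missing event would block all later ones unless you add a timeout, which is part of why ordering-aware services are the more reliable choice.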

Flexible Processing: EventBridge Pipes provide integrations to a range of event-driven services as

Costs: This example considers a use case that can handle large payloads. However, if you can ensure that providers send minimal payloads, consider a simpler architecture without the claim-check pattern to minimize cost.

Conclusion

Webhooks are a popular method for applications to communicate, and for businesses to collaborate and integrate with customers and partners.

This post shows how you can build applications to send and receive webhooks on Amazon Web Services. It uses serverless services such as EventBridge and Lambda, which are well-suited for event-driven use cases. It covers high-level reference architectures, considerations, best practices and

For standards and best practices on webhooks, visit the open-source community resources

For more serverless learning resources, visit
